Artificial Intelligence (AI) is all the rage nowadays. From simple pattern recognition to complex tasks such as weather prediction, a large variety of applications claim to be improved by using artificial intelligence.

The field of artificial intelligence is several decades old but is only now gaining widespread relevance due to digital proliferation and ubiquitous computing. AI has become more relevant as organizations navigate the complex world of cloud and edge infrastructure. Using AI to harness historical data and predict future system behavior is one of its most sought-after business values. In this article, we lay out the basic concepts underpinning AI, their meaning, relevance, and use cases. We also outline solutions that make AI accessible to device makers and product organizations.

First, a few terms

The AI world is full of terms that can be overwhelming for the beginner. While the terms themselves have existed for decades, their meaning and interpretation are sometimes fluid and need definition.

- Algorithm – An algorithm is a set of instructions that a processor can execute. Most software programming involves devising algorithms to perform tasks. The complexity of an algorithm depends on the complexity of each step to be executed and on the number of steps. Many algorithms in everyday use are simple (e.g., if-then statements) and follow specific, rule-based actions.

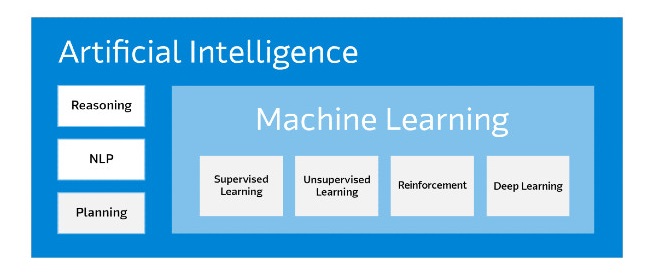
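As a minimal illustration of a simple, rule-based algorithm, the sketch below decides what a thermostat should do using fixed if-then rules. Nothing is learned from data. The function name `thermostat_action` and the 0.5 °C tolerance band are hypothetical choices made for this example.

```python
def thermostat_action(temp_c: float, setpoint_c: float, band_c: float = 0.5) -> str:
    """Return 'heat', 'cool', or 'idle' using fixed if-then rules (no learning)."""
    # Rule 1: colder than the setpoint by more than the tolerance band -> heat
    if temp_c < setpoint_c - band_c:
        return "heat"
    # Rule 2: warmer than the setpoint by more than the tolerance band -> cool
    if temp_c > setpoint_c + band_c:
        return "cool"
    # Otherwise the temperature is within tolerance -> do nothing
    return "idle"


for reading in (18.0, 21.2, 23.5):
    print(reading, thermostat_action(reading, setpoint_c=21.0))
```

Every behavior of this program was written down in advance by a programmer. That fixed, pre-programmed behavior is what distinguishes it from the learning approaches described next.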

- Artificial intelligence (AI) – AI is often defined as a system's ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and perform tasks through adaptation. For example, AI can use historical data to develop a model that predicts future data. AI can also learn from new data and adapt the model to predict future data more accurately. To learn and adapt, AI employs machine learning techniques.

- Machine Learning (ML) – Machine learning involves mathematical approaches that enable computers to learn from data and perform tasks without being explicitly programmed to do so. Many learning approaches exist. Which one is used depends on the type of data available and the complexity of the tasks to be performed.

- Supervised Learning involves training an algorithm on datasets of known inputs paired with their known outputs. The data is labeled in advance, and the algorithm uses it to learn the general rules that relate the inputs to the outputs. Once the algorithm predicts the known outputs from the known inputs with minimal error, it is trained and can be used to predict outcomes for new, unknown inputs.

- Unsupervised Learning is used when the underlying data has no pre-identified patterns or relationships. Essentially, the data is not labeled, leaving the system to identify patterns and relationships between inputs on its own. Discovering hidden patterns in data and extracting features from it are key purposes of unsupervised learning approaches.

- Reinforcement Learning is a kind of training in which the algorithm is directed toward a specific goal (e.g., winning at chess or performing a specific task). The algorithm acts to maximize its chances of achieving that goal and uses feedback, in the form of rewards or penalties, to build the most efficient path to the objective.

- Deep Learning uses artificial neural networks, a technique loosely modeled on the way neurons in the human brain process information. Example data is used to train the algorithm.

The learning can be supervised or unsupervised, but it always uses multiple layers of processing to extract progressively higher-level features from the raw input data. Because deep learning is loosely inspired by how the human brain processes information, it finds application in tasks that people generally do. It is a key technology behind driverless cars, enabling them to recognize a stop sign and to distinguish a pedestrian from a lamp post.
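The supervised learning workflow described above (learn from labeled input/output pairs, check the error on the known data, then predict for new inputs) can be sketched with the simplest possible model: a straight line fitted by ordinary least squares. This is a minimal illustration, not a production method. The training data and the function names `fit_line` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Learn a slope and intercept from labeled (x, y) pairs by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned rule y = slope * x + intercept to an input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical labeled training data: motor load (%) -> motor temperature (°C)
train_x = [10, 20, 30, 40, 50]
train_y = [31, 35, 39, 43, 47]

model = fit_line(train_x, train_y)

# Training check: the model should reproduce the known outputs with minimal error
mse = sum((predict(model, x) - y) ** 2 for x, y in zip(train_x, train_y)) / len(train_x)
print("training MSE:", mse)

# Prediction: apply the trained model to an input it has never seen
print("predicted temperature at 65% load:", predict(model, 65))
```

Real supervised models (decision trees, neural networks, and so on) are far richer. The two-phase pattern of learning from labeled examples and then predicting unseen inputs stays the same.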
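To illustrate the unsupervised learning description above, the sketch below uses k-means clustering, one common unsupervised technique (chosen here as an example, not the only option). It receives unlabeled points and discovers groups on its own. The sensor readings and the helper names are hypothetical.

```python
import random


def squared_distance(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabeled 2-D points into k clusters with Lloyd's k-means algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = (sum(m[0] for m in members) / len(members),
                                sum(m[1] for m in members) / len(members))
    return centroids, clusters


# Hypothetical unlabeled readings: (normalized vibration, normalized temperature)
readings = [(1.0, 1.1), (0.9, 1.0), (1.2, 0.8), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
centroids, clusters = kmeans(readings, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

The algorithm is never told which readings belong together. It separates the two operating regimes purely from the structure of the data.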
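The reinforcement learning idea above (pursue a goal and learn from reward feedback) can be sketched with tabular Q-learning on a toy corridor. The agent starts at position 0 and is rewarded for reaching position 4. The environment, the small step penalty, and all parameter values (`alpha`, `gamma`, `epsilon`, episode count) are hypothetical choices for illustration.

```python
import random

N_STATES = 5          # positions 0..4 in a hypothetical corridor
GOAL = N_STATES - 1   # reaching position 4 is the goal
ACTIONS = (-1, +1)    # move left or move right


def step(state, action):
    """Environment: apply a move and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True    # feedback: reward for reaching the goal
    return next_state, -0.01, False     # small penalty per step favors short paths


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise exploit the best action found so far
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

Nobody tells the agent to move right. It works that out from the rewards and penalties it receives, which is the feedback loop the definition describes.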
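To make "multiple layers" concrete, here is a toy deep network with two hidden layers, written in plain Python and trained by backpropagation on the XOR problem. XOR cannot be solved by a single linear rule. This is a teaching sketch under simplifying assumptions (sigmoid activations, squared-error loss, per-example gradient descent). Real deep learning work uses frameworks such as PyTorch or TensorFlow. The layer sizes, learning rate, and function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(sizes, rng):
    """Create one (weights, biases) pair per layer; weights[j][i] links input i to neuron j."""
    return [([[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(network, x):
    """Return the activations of every layer, starting with the input itself."""
    activations = [x]
    for weights, biases in network:
        prev = activations[-1]
        activations.append([sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
                            for row, b in zip(weights, biases)])
    return activations


def train_step(network, x, target, lr):
    """One backpropagation step on a single example; returns its squared-error loss."""
    acts = forward(network, x)
    out = acts[-1]
    # Output-layer error signal: derivative of 0.5 * (y - t)^2 through the sigmoid
    deltas = [(y - t) * y * (1.0 - y) for y, t in zip(out, target)]
    for layer in range(len(network) - 1, -1, -1):
        weights, biases = network[layer]
        prev = acts[layer]
        # Pass the error back one layer before changing these weights
        # (for the first layer this is computed but never used)
        prev_deltas = [sum(weights[j][i] * deltas[j] for j in range(len(deltas)))
                       * prev[i] * (1.0 - prev[i]) for i in range(len(prev))]
        # Gradient-descent update for this layer's weights and biases
        for j, d in enumerate(deltas):
            for i, a in enumerate(prev):
                weights[j][i] -= lr * d * a
            biases[j] -= lr * d
        deltas = prev_deltas
    return 0.5 * sum((y - t) ** 2 for y, t in zip(out, target))


xor_data = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
network = init_network([2, 4, 4, 1], random.Random(42))  # two hidden layers of 4 neurons

losses = []
for epoch in range(4000):
    epoch_loss = sum(train_step(network, x, t, lr=0.5) for x, t in xor_data)
    losses.append(epoch_loss / len(xor_data))

print(f"average loss: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
print([round(forward(network, x)[-1][0], 2) for x, _ in xor_data])
```

Each hidden layer re-combines the features produced by the layer below it. That stacking is what "multiple levels of learning" refers to. Production networks use the same idea with many more layers and far more data.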

Sophisticated AI often encompasses not just machine learning but also other elements of typical human intelligence like natural language processing, reasoning, and planning. These are particularly relevant in robotics and physical machines that interact with humans.

Figure 1: Relationship between artificial intelligence, machine learning, and deep learning

Determining the level of AI needed for an application

AI is a vast field with many variants, techniques, and applications. Performing tasks in known and unknown circumstances, with structured and unstructured data inputs, requires different degrees of AI sophistication. The table below summarizes when each level of sophistication is, and is not, a good fit. Choosing the right AI approach saves resources and time while still achieving the desired outcomes.

| AI sophistication / technique | When to use | When not to use |
|---|---|---|
| Simple algorithms | Determine a target value by using simple rules, computations, or predetermined steps that can be programmed without needing any data-driven learning. | Large-scale, non-deterministic outcomes involving many factors. |
| Machine learning | Tasks cannot be adequately solved using a simple (deterministic), rule-based solution. | Solving low-complexity problems. |
| Deep learning | A large training data set is available (hundreds of thousands or millions of data points) to enable the system to train itself. | Small datasets. |
| Artificial intelligence | The cost of slow decisions is high. | Small training datasets, low compute resources. |

AI applications in IoT and embedded infrastructure

In the last two decades, the Internet of Things (IoT) has undergone a hyper-growth cycle. Devices large and small are now connected to the network in a wide variety of arenas. Industrial, commercial, public, and residential settings all have devices that depend on connectivity. Underpinning this connectivity are cloud infrastructure, communications networks, and edge devices. Thermostats, security cameras, sensors, and similar devices collect immense amounts of structured and unstructured data that must be analyzed for insight. The sheer volume and variety of this data make a strong case for applying AI techniques to derive insight. Facial identification for security, predictive maintenance of factory infrastructure, and similar applications use AI to enhance human productivity and reduce costs.

Until recently, the heavy compute demands of AI processing meant that AI techniques were deployed largely in the cloud. Data was collected and archived in the cloud, and AI analytics were performed separately on enterprise appliances or telecom edge processors. Traditional local devices at the edge of the network lacked the capacity or power to house AI computing arrays.

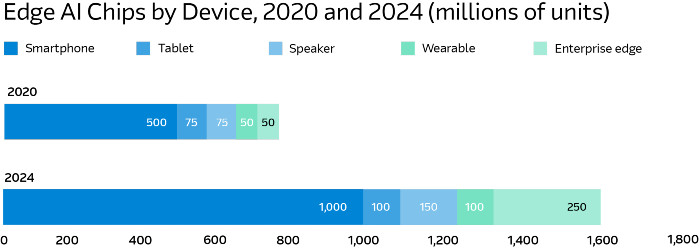

New-generation edge AI chips are now bringing AI to the edge. Compared with the data center hardware traditionally used for AI, these chips are physically smaller and less expensive, consume less power, and generate much less heat. Given these characteristics, they are now being integrated into edge devices such as smartphones, kiosks, robots, and industrial appliances. Edge AI chips let these devices perform processor-intensive AI computations locally. This reduces or eliminates the need to send large amounts of data to the cloud, which improves speed and data security.

Figure 2: The Edge AI market is expected to grow at a CAGR of ~21% from 2020 to 2024

(Source: MarketsandMarkets and Deloitte Insights)
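To put the ~21% CAGR in Figure 2 in perspective, assume annual compounding over the four years from 2020 to 2024. Let $M_{t}$ denote market size in year $t$ (a symbol introduced here for illustration). The implied growth factor is then:

$$
\frac{M_{2024}}{M_{2020}} = (1 + \mathrm{CAGR})^{2024 - 2020} \approx (1.21)^{4} \approx 2.14
$$

In other words, the projection implies the edge AI market roughly doubles over the period.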

Some applications rely on data processed by a remote AI array, especially when there is simply too much data for a device's edge AI chip to handle. In many applications, however, a hybrid implementation with a mix of on-device and cloud processing is beneficial. Biometrics, augmented and virtual reality, fun image filters, voice recognition, language translation, voice assistance, and image processing are just a few applications with AI at their core. These applications can be driven by processors without an edge AI chip, or even in the cloud. However, they typically run faster and use less power (thereby improving battery life) when performed by an edge AI chip.
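One common way to structure the hybrid on-device/cloud implementation mentioned above is confidence-based escalation. A small on-device model answers first, and a sample is sent to a larger cloud model only when the local result is uncertain. The sketch below assumes this pattern. The model stubs, the `Prediction` type, and the 0.8 threshold are all hypothetical stand-ins, not a specific vendor API.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0
    source: str        # "edge" or "cloud"


def hybrid_infer(sample: dict,
                 edge_model: Callable[[dict], Tuple[str, float]],
                 cloud_model: Callable[[dict], Tuple[str, float]],
                 threshold: float = 0.8) -> Prediction:
    """Try the on-device model first; escalate to the cloud only when it is unsure."""
    label, confidence = edge_model(sample)
    if confidence >= threshold:
        return Prediction(label, confidence, "edge")   # fast path: data stays on the device
    label, confidence = cloud_model(sample)
    return Prediction(label, confidence, "cloud")      # slow path: larger remote model


# Hypothetical stand-ins for a small on-device model and a larger cloud model
def tiny_edge_model(sample):
    return ("person", 0.95) if sample["brightness"] > 0.5 else ("person", 0.40)


def big_cloud_model(sample):
    return ("person", 0.97)


print(hybrid_infer({"brightness": 0.9}, tiny_edge_model, big_cloud_model))
print(hybrid_infer({"brightness": 0.2}, tiny_edge_model, big_cloud_model))
```

Raising the threshold sends more samples to the cloud (more accuracy, more latency and bandwidth). Lowering it keeps more work on the edge chip.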

In addition to being relatively inexpensive, standalone edge AI processors have the advantage of a small form factor. Edge AI chips from Nvidia, Qualcomm, NXP, and STMicroelectronics range in size from a USB stick to a deck of cards and draw between 1 and 10 watts. Effective deployment of edge AI can reduce the need for very expensive data center clusters that often require investments of hundreds of thousands of dollars. The advent of tiny machine learning (TinyML) approaches also helps drive more AI analytics to the edge. TinyML refers to a subset of machine learning technologies capable of performing on-device analytics of sensor data at extremely low power. This is very useful in industrial settings and is expected to drive further AI proliferation at the edge.
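As a flavor of the lightweight on-device sensor analytics that TinyML targets, the sketch below flags unusual sensor readings using constant memory and a few arithmetic operations per sample. It keeps a running mean and variance with Welford's algorithm and a z-score threshold. This is a simple statistical model learned online, not a TinyML framework. It is written in Python for readability, whereas microcontroller code would more typically be C/C++. The class name, threshold, warm-up length, and readings are hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings far from the running mean using O(1) memory (Welford's algorithm)."""

    def __init__(self, z_threshold: float = 3.0, warmup: int = 10):
        self.z_threshold = z_threshold
        self.warmup = warmup   # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0          # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous, then fold x into the running statistics."""
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                is_anomaly = True
        # Welford update: numerically stable running mean and variance
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return is_anomaly


# Hypothetical vibration readings from a motor, with one spike at index 11
readings = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97, 1.01, 0.99, 1.00, 2.50, 1.01]
detector = StreamingAnomalyDetector()
flags = [detector.update(r) for r in readings]
print([i for i, flagged in enumerate(flags) if flagged])
```

Because only three numbers are stored per sensor, this kind of check can run continuously next to the sensor. Only the flagged events need to be sent upstream, for example to trigger predictive maintenance.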

Summary

AI is everywhere and is bringing dramatic improvements in human productivity. Finding insights in data requires applying a variety of AI techniques, most of which are based on machine learning. Machine learning covers a host of approaches that help computers discern patterns in data and perform tasks without being explicitly programmed to do so. Problems with large data sets and highly non-deterministic outcomes are ideal candidates for AI. IoT and embedded computing at the network edge is one such area: millions of edge devices collecting data can now benefit from AI insights right at the edge. Together, AI chip hardware and TinyML are ushering in a new era of computing in which increasingly complex deep learning models can run directly on microcontrollers at the edge.

With chips like these in the works, edge AI can open many new possibilities for enterprises, particularly in IoT applications. Using edge AI chips, companies can greatly increase their ability to analyze, not just collect, data from connected devices and convert this analysis into action. They can also avoid the cost, complexity, and security challenges of sending huge amounts of data to the cloud. Arrow's broad ecosystem of hardware, software, cloud, and services enables customers to develop AI applications from the edge to the cloud.

References

- AI vs. Algorithms: What's the Difference?

- AI, ML, and DL: How not to get them mixed!

- Bringing AI to the device: Edge AI chips come into their own

- Why TinyML is a giant opportunity