The world is fast moving to a phase where we expect ‘smart’ everything. It started a few years ago with talk of smart cities alone, but more recently the granularity has become finer, with both technical and non-technical people exploring areas like smart mobility, smart energy, smart factories, smart agriculture and smart homes.

Smart Everything is Being Fueled by AI-enabled Embedded Vision

Initially the smart element was based on analyzing sensor data for key parameters, whether temperature, humidity, vibration or some other environmental factor. However, advances in computing power, especially at the edge of networks, have meant that a vision element is also being added, because images can now be interpreted using deep learning, one part of what is generally labelled artificial intelligence (AI).

Not only that, but the huge progress made in advanced driver assistance systems (ADAS) and connected autonomous vehicles has meant that vision systems and AI have made a huge impact in many other industries in addition to automotive. One example is Israeli startup Vayavision, which earlier this year launched a software-based autonomous vehicle environmental perception engine that upscales raw data from camera, lidar and radar sensors to provide what it claims is a more accurate 3D model than object fusion-based platforms.

CEO Ronny Cohen told EE Times that today’s object-led fusion of sensor data is not reliable and can lead to objects being missed. Roads are full of unexpected objects that are absent from training data sets, even when those sets are captured while travelling millions of kilometers. He said most current-generation autonomous driving solutions are based on object fusion, in which each sensor registers an object independently and the system then reconciles which data is correct. This can produce inaccurate detections and result in a high rate of false alarms, and ultimately accidents.

Embedded Vision Is Major Driver of AI

In its technology trends report on artificial intelligence, published in January 2019, WIPO (World Intellectual Property Organization) identified computer vision, which includes image recognition, as the most popular AI functional application. Computer vision is mentioned in 49 percent of all AI-related patents (167,038 patent documents), growing annually by an average of 24 percent (21,011 patent applications filed in 2016). The AI functional applications with the highest growth rates in patent filings in the period 2013 to 2016 were AI for robotics and control methods, which both grew on average by 55 percent a year.

The combination of deep learning and computer vision is likely to have a huge impact in many application areas, including industrial, agriculture, medical imaging and surveillance, to name just a few. The industrial machine vision systems market is projected to reach US$14.11 billion by 2025, growing at a CAGR of 7.4% from 2018 to 2025, according to an estimate from Verified Market Research.
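To put the growth figure in context, the short sketch below works backwards from the 2025 projection to the market size it implies for 2018. It assumes seven full years of annual compounding at the quoted CAGR. The resulting base value is a derived illustration only, not a figure taken from the Verified Market Research estimate, and the function name is hypothetical.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Invert the compound-growth formula: final = base * (1 + cagr) ** years."""
    return final_value / (1.0 + cagr) ** years


# Figures quoted in the article: US$14.11 billion by 2025, 7.4% CAGR from 2018.
# Assumption: growth compounds once a year over 2025 - 2018 = 7 years.
base_2018 = implied_base(14.11, 0.074, 2025 - 2018)

print(f"Implied 2018 market size: US${base_2018:.2f} billion")
```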

Industrial vision systems comprise automatic image capture, evaluation and processing capabilities, based on digital cameras and back-end image processing hardware and software. Industrial applications include positioning, identification, verification, vibration and flaw detection. Integration of embedded vision systems into robotics is especially gaining strength, not just in industrial and manufacturing settings but also in consumer, military, healthcare and government applications.

AI Vision to Guide Derelict Satellites

There’s no limit to the scope for embedded vision. Stanford University professor of aeronautics and astronautics Simone D’Amico is looking at developing an AI-powered navigation system to direct spaceborne ‘tow trucks’ designed to restart or remove derelict satellites circling aimlessly in graveyard orbits. His Space Rendezvous Lab (SLAB) is working with the European Space Agency (ESA) to spur development of such a system. They launched a competition for an AI system that would identify a derelict satellite and, without any input from assets on Earth, guide a repair vessel to navigate alongside to refuel, repair or remove it.

The navigation system D’Amico has in mind would be inexpensive, compact and energy-efficient. To spot defunct satellites, the repair vehicle would rely on cameras that take simple gray-scale images, just 500-by-500 pixels, to reduce data storage and processing demand. Barebones processors and AI algorithms that come out of the competition would be integrated directly into the repair satellite. No ground communication would be required. The goal is simplicity: processors and algorithms that require low-resolution images and limited computation to navigate space. Essentially, the spacecraft would have to be able to see and think for itself.
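A rough sense of why small gray-scale frames matter can be had from a simple storage calculation. The sketch below assumes uncompressed frames at 8 bits per pixel, which the article does not specify, and compares the 500-by-500 gray-scale frame with a hypothetical 1920-by-1080 three-channel color frame chosen purely for contrast.

```python
def frame_bytes(width: int, height: int, channels: int = 1, bits_per_pixel: int = 8) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * channels * bits_per_pixel // 8


# 500 x 500 gray-scale frame as described in the article (8-bit depth is an assumption)
gray_frame = frame_bytes(500, 500)                 # 250,000 bytes

# Hypothetical comparison point: a 1920 x 1080 RGB frame at 8 bits per channel
color_frame = frame_bytes(1920, 1080, channels=3)  # 6,220,800 bytes

ratio = color_frame / gray_frame
print(f"Gray-scale frame: {gray_frame} bytes")
print(f"Color HD frame:   {color_frame} bytes ({ratio:.1f}x larger)")
```

Under these assumptions every stored or processed frame is roughly 25 times smaller than the color alternative, which is the kind of saving a barebones on-board processor would depend on.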

Keeping Forestry in Check

Bringing things back to earth, forestry and agriculture are areas where embedded vision and AI come into their own. Countries like New Zealand have long been utilizing IoT technology to improve agricultural operations and yield.

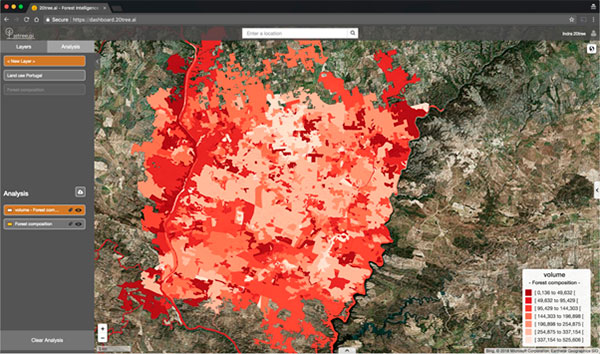

The addition of vision capability and the ability to analyze images adds a new dimension. Portugal-based 20tree.ai, part of NVIDIA’s Inception startup program, is combining AI and satellite imagery to analyze entire forests in a fraction of the time currently required. Using GPUs running in-house and in the cloud via AWS and Google, it feeds satellite data into its proprietary deep learning algorithms to create highly accurate insights and reveal patterns not visible to the human eye. The total amount of satellite data captured on a daily basis runs to tens of terabytes, so the ability to make sense of that data using massive computing power is essential if it is going to be of any use to both non-governmental and commercial organizations.

Crowd Counting and People Recognition

Part of the proposition of vision and AI systems in smart cities is people counting and crowd monitoring. Leaving aside the issue of privacy (which is another debate altogether), one of the challenges here is how to accurately count the number of people in still images. Difficulties include occlusion, where images contain only partially visible people, and scale variation, where people in both the near and far field of view need to be taken into account.

Arrow Electronics’ company eInfochips has developed a new 96Boards board based on NXP’s latest i.MX8M Quad, which combines Arm Cortex-A53 cores with Neon instructions and an Arm Cortex-M4 core. Arrow’s engineering solution center software team has ported and updated its edge AI machine vision deep neural network to run on this new board. It executes a multi-column deep neural network consisting of multiple convolutional layers with pooling and ReLU activation functions. The network is trained using the openly available ShanghaiTech dataset.
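Crowd-counting networks of this kind are commonly trained to predict a density map whose pixel values sum to the number of people in the image. The article does not say whether the Arrow network works this way, so the sketch below is only an illustration of that general technique: it builds a ground-truth density map from hypothetical head annotations using NumPy, with the kernel size and sigma chosen arbitrarily.

```python
import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Return a size x size Gaussian kernel normalised to sum to 1 (size must be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def density_map(shape, head_points, size: int = 15, sigma: float = 4.0) -> np.ndarray:
    """Stamp one unit-mass Gaussian per annotated head onto an image-sized map."""
    h, w = shape
    pad = size // 2
    kernel = gaussian_kernel(size, sigma)
    dmap = np.zeros((h, w), dtype=np.float64)
    for row, col in head_points:
        # Clip the kernel window to the image borders
        r0, r1 = max(row - pad, 0), min(row + pad + 1, h)
        c0, c1 = max(col - pad, 0), min(col + pad + 1, w)
        patch = kernel[r0 - (row - pad):r1 - (row - pad),
                       c0 - (col - pad):c1 - (col - pad)]
        # Renormalise so a head near the edge still contributes exactly 1
        dmap[r0:r1, c0:c1] += patch / patch.sum()
    return dmap


# Hypothetical (row, col) head annotations for a 100 x 120 image
heads = [(5, 5), (40, 60), (99, 0)]
dmap = density_map((100, 120), heads)
estimated_count = dmap.sum()
print(f"People counted from density map: {estimated_count:.1f}")
```

Because each person is spread over a small blob rather than a single bounding box, this representation tolerates partial occlusion, and varying the kernel width is one way such methods account for people at different scales.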

This type of solution has application in multiple areas, such as human flow monitoring and traffic control as part of a smart city solution or at airports, and overcrowding avoidance to ensure safety at events. Another potential application is in smart retail, to identify customer points of interest.

Ready-Made Modules With Workstation Performance for the Edge

For deployment of advanced AI and computer vision to the edge, NVIDIA has an embedded module solution for developers, the Jetson AGX Xavier. This gives robotic platforms in the field workstation-level performance and the ability to operate fully autonomously without relying on human intervention or cloud connectivity. Intelligent machines using these modules can be developed to freely interact and navigate safely in their environments, unencumbered by complex terrain and dynamic obstacles, accomplishing real-world tasks with complete autonomy.

Such tasks include package delivery and industrial inspection, which require advanced levels of real-time perception and inferencing. The Jetson AGX Xavier module delivers GPU workstation-class performance with 32 TeraOPS (TOPS) of peak compute and 750Gbps of high-speed I/O in a compact 100 x 87 mm form factor. Users can configure operating modes at 10W, 15W, and 30W as needed for their applications. This gives it the performance to handle the visual odometry, sensor fusion, localization and mapping, obstacle detection, and path planning algorithms critical to next-generation robots. Figure 1 shows the production compute modules now available globally. Developers can now begin deploying new autonomous machines in volume.

This and other AI-enabled embedded vision solutions will be in abundance at the Embedded World conference in Nuremberg, Germany, and Arrow Electronics will be showing several of the solutions mentioned in this article.

Image: 20tree.ai uses AI and NVIDIA GPUs to analyze satellite images of forestry and other spaces (Source: 20tree.ai)

Image: The NVIDIA Jetson AGX Xavier embedded compute module with thermal transfer plate (Image: NVIDIA)